In a galaxy quite close to home…

Regular readers should be encouraged to discover that our adherence to this great tradition has not wavered, as we not only enjoy some advent fun in the run up to the Christmas holidays, but also remember a good friend and colleague, despite being gripped by the closing in of the dark side – until Spring at least.

The rules remain the same: each day one door will be opened, and one brave Jedi will do battle with fiddly little pieces of plastic, and then triumphantly append a photo of their finished masterpiece to this post.

We’ll be nagging team members to update this blog post daily with their latest Lego adventures (with possible delays over the weekends). The first update should appear very shortly…

1st December 2016 (Adrian)

Many many aeons after Jango Fett had stolen the patrol craft and taken it as his own and named it Slave, a strange event occurred. A new venture to explore the outer reaches of the galaxy was about to commence. Then, out of the early frosty and wintry morning a new ship appeared. But not one of these new-fangled jobs. It was an absolute clone of the Slave. Where it came from, no-one knew. But it was seen hovering in front of a set of old blue-prints of the Slave, and the myth arose that it had spontaneously auto-reassembled from thin air. It henceforth became known as henceforth-the-auto-reassembled.

2nd December 2016 (Alex)

This poor Bespin guard bursts from his plastic and cardboard prison, takes a moment to compose himself — luckily he is of few parts — and looks around. This was not Bespin, as evidenced by there being a ground to stand upon. Turning, he discovers an abandoned patrol craft and wonders whether he might fit inside. Thinking it unlikely and unsure of what else to do, he resolves that he should do what he knows best. He stands and guards it, waiting.

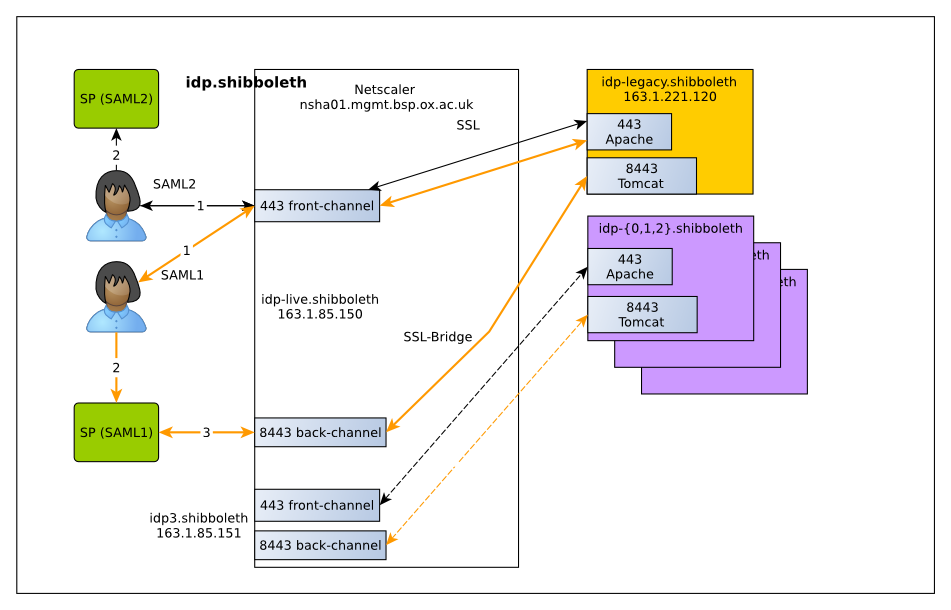

3rd December 2016 (Christopher H)

4th December 2016 (ChrisF)

A passing Imperial Navy Trooper swaggers on to the scene and sees a bored looking Bespin Guard who seems to have developed a fondness for a piece of nearby space junk. Naturally the Bespin Guard takes offence at this description of the recently discovered patrol craft. Honour must be satisfied!

So, the duel begins… Ten paces, turn and vapourise! But who will be the victor?

5th December 2016 (Chux)

Trying to make the most of the temporary refuge which Echo Base had become, General Rieekan orders the installation of DF.9 gun turrets at vantage points around the base.

This particular DF.9 was hastily installed to contain a clutch of Imperial AT-AT walkers and ground troops spotted making a bee-line for a particularly precious Christmas tree.

And just in time too: the gun turret helps defeat the Imperial forces and saves Christmas at Echo Base!!!

6th December (Dameon)

And now there are three of them, it turns from a casual duel, to an official Mexican standoff!

“Get off my snowfield, Bespin scum!” yells the Snow Trooper, “I don’t even know what a Mexican is!”

“I don’t think we have them in this far, far away galaxy,” replies the Navy Trooper, before turning his blaster on the cloud-city native, all the while keeping one eye on the distant gun turret.

7th December (Jim)

Olaf the Snowtrooper Snowman has just come down the mountain.

“But where are Anna and Kristoff? We were due to go up the mountain to find Elsa!”

“And which bastard took my stick arms?”

He then looks to his left and sees the HX400-UT Imperial Blaster Cannon and ejaculates:

“Bollocks! I’m in the wrong movie!”

8th December (Dave)

A resupply mission gives the Mexican standoff a turn to the unfair when the Imperial Navy Trooper finds himself armed with a dish cannon.

“Feel the POWER of MY Force! Yeah, baby! This is what I’M TALKIN’ ABOUT!”

“Will? Will Smith? Is that you?” asks the Bespin Guard.

“CUT CUT CUT CUT CUT!” screams the director. “Will you guys PLEASE stick to the script? You ain’t the Fresh Prince of nothin’ out here and your ad libs ARE NOT better than the lines we gave ya. Okay, ready people? Let’s make some MAGIC today! From the top, ACTION!”

9th December (DR)

Where the hell did I put my goggles?

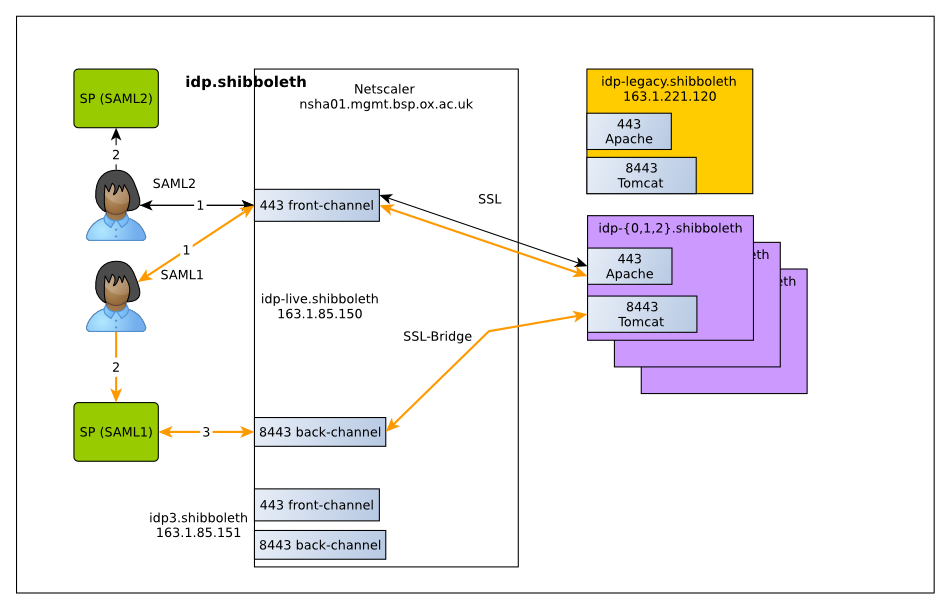

10th December (Jim)

Newly assigned to his post as Admiral of “Constipation”, the Galactic Republic’s new Venator-class Star Destroyer, Lar Jarse stood on the bridge and surveyed the life teeming on the planet below.

“Well, we can’t have this! All these creepy-crawly things squirming around to no good! It just isn’t British!”

As his crew desperately scrambled for their scanners, the new Admiral vociferated and the unfortunate planet’s fate was sealed.

“Unleash the death-ray!”

11th December (Julian)

Back on the other nearby planet an Armoured Assault Tank swings into view. Its clone pilots are briefly confused not to see the Gungans that they were originally pursuing. Ah well, let’s take out that Bespin Guard first and then see whether we get any further orders from our leader – hang on, maybe that carrot-nosed trooper is our leader?

12th December (Ken)

“Mayday! Mayday! Mayday!”

13th December (Robert)

“Hold it right there!” yelled the battle droid, as it came across Olaf. “Give me the battle cannon or else!”

Olaf sighed, and continued to stare into the distance as he wondered just where he had ended up. “Just let it go…” he muttered…

14th December (Michael)

As Obi-Wan takes a sharp right around the festively-decorated turret, he idly wonders how he’s being pursued by a ship that wouldn’t be invented for many years. The magic of Christmas?

15th December (Dameon)

“What do you mean ‘The damage doesn’t look so bad from out there’?” exclaims the increasingly pessimistic Captain Antilles.

“‘Oh, there’s the Tantive IV’ they say, ‘Let’s pull them over and check their systems for secret plans again. That’s always a good laugh’ … damned imperial speed cops, don’t they have anything better to do with their time?”

“Oh well, brace for impact … again…”

16th December (Robert)

Meanwhile, E-3P0 was admiring the Empire’s latest Star Destroyer, “Constipation”. Whilst he was well aware of its ability to destroy entire planets with a single shot, from here it looked small; almost insignificant compared to some of the other ships he had seen lately. Such a powerful ship deserved a name with distinction, a name with gravitas. “Constipation”? That has zero gravitas, he thought.

17th December (Stu)

A GNK Power Droid arrives to charge up the parked Starship Enterprise*. “It’s a lot smaller than it looks on the telly,” it gonks.

[* Pesky wormholes, mixing up the universes.]

18th December (Adrian)

Of course, all along Jabba (the Hutt) had been up in his palace atop Mt Pannatooine, observing the arrival of lots of goodies for the plunder down in the valley below. He was licking his slobbery chops and anticipating a good festive season. What he didn’t know though, was that his musings were to be rudely interrupted by a swarm of raisin-bots which had scaled the steep cliffs and were intent on some plundering of their own.

19th December (Alex)

Luke who it is! The Death Star Trooper tries to take aim but finds himself lifted into the air as the protocol droid looks on. Better style this one out, he thinks. “Thanks, Matilda!” says Luke to the small girl hiding in the Gonk droid.

20th December (ChrisF)

Fast forward… and our once heroic Imperial Navy Trooper, having eradicated every last trace of Rebel nonsense in the neighbourhood (especially that Bespin rogue with poor dress sense), is now bored witless and is reduced to handing out on-the-spot fines to illegally parked Desert Skiffs for a living. It was either that or a job grilling rontoburgers at the local “StarChow! All the nutrients you can chew, suck or absorb for only 99 Imperial Credits!”

21st December (Christopher H)

Lounging on his sun-bed, the tired soldier saw an approaching airborne object. “Is it a bird? Is it a plane? I’ll shoot it down anyway,” he thought.

22nd December (Stu)

An Imperial Sentinel Class Landing Craft cruises around with Luke roof-surfing, before doing what it does best, and landing. “Look at me! Olaf. Look at me! Olaf! OLAF! YOU’RE NOT LOOKING!!”

23rd December (Nigel)

Having not only gained Olaf’s attention, but also an impromptu lecture about how “Star Wars” is “so last millennium, baby” and the future is in Ice and crossover movies, “like, um, ‘Star Wars Frozen’”, Luke trades his father’s lightsaber for a pair of ice skates and a hockey stick, determined to hone his Jedi Hockey skills (puck telekinesis, anyone?) for the musical “Disney On Ice: Star Wars” that Olaf makes him certain is just around the corner.

Feel the freeze, Luke, feel the freeze.

24th December (Dave H)

The Albino Chewbacca is decked with festive decoration – his bandolier is painted red and green. He comes with a snazzy new bowcaster which fires off snowballs (1×1 studs) which is always a great weapon to have. He also comes with two miniature pine trees and some spare snowballs.

A great way to finish the 2016 Star Wars Advent calendar.

Happy Christmas!